City University of Hong Kong (CityU) led a research project that developed a low-power artificial visual system that imitates the human brain. In experiments, the system successfully carried out several data-intensive cognitive tasks. The results could provide next-generation artificial intelligence applications with a promising device system.

The research team is led by Professor Johnny Chung-yin Ho, Associate Head and Professor of the Department of Materials Science and Engineering (MSE) at CityU. The findings were published in the scientific journal ‘Science Advances’ under the title ‘Artificial Visual System Enabled by Quasi-Two-Dimensional Electron Gases in Oxide Superlattice Nanowires’.

As innovation in the semiconductor technologies used in digital computing slows, brain-like computing systems are being considered as a future alternative. Scientists have been trying to build next-generation AI computers that are highly portable, energy-efficient and able to adapt the way the human brain does.

‘Unfortunately, effectively emulating the brain’s neuroplasticity (the ability to change its neural network connections or re-wire itself) in existing artificial synapses in an ultralow-power manner is still challenging’, Professor Ho said.

An artificial synapse is an artificial version of a synapse, the junction across which two neurons in the brain pass signals to communicate with each other. It is a device that emulates the brain’s efficient neural signal transmission and memory formation.

To improve the energy efficiency of artificial synapses, Professor Ho and his research team introduced quasi-two-dimensional electron gases (quasi-2DEGs) into artificial neuromorphic systems. Using oxide superlattice nanowires they had developed, a type of semiconductor with remarkable electrical properties, they designed quasi-2DEG photonic synaptic devices that achieve a record-low energy consumption at the sub-femtojoule level (0.7 fJ) per synaptic event. This is a 93% reduction in energy consumption compared with synapses in the human brain.
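As a rough consistency check, the two figures quoted above can be combined. If the device uses 93% less energy than a biological synapse, then the implied biological reference is the device energy divided by (1 − 0.93). The sketch below only rearranges the article's own numbers; the variable names are illustrative, and since "93%" is presumably a rounded figure, the result should be read as approximate.

```python
# Figures quoted in the article, per synaptic event
device_energy_fj = 0.7      # quasi-2DEG photonic synapse, in femtojoules (1 fJ = 1e-15 J)
reported_reduction = 0.93   # "93% decrease" relative to a biological synapse

# If E_device = (1 - reduction) * E_bio, then E_bio = E_device / (1 - reduction)
implied_bio_energy_fj = device_energy_fj / (1 - reported_reduction)

print(f"Implied biological synapse energy: {implied_bio_energy_fj:.1f} fJ")
```

Running this prints an implied biological figure of roughly 10 fJ per synaptic event, which is the baseline the 93% comparison appears to assume.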

‘Our experiments have demonstrated that the artificial visual system based on our photonic synapses could simultaneously perform light detection, brain-like processing and memory functions in an ultralow-power manner. We believe our findings can provide a promising strategy to build artificial neuromorphic systems for applications in bionic devices, electronic eyes, and multifunctional robotics in future’, Professor Ho said.

He explained that a two-dimensional electron gas forms when electrons are confined to a two-dimensional interface between two different materials. Because there are no electron-electron or electron-ion interactions, the electrons move freely along the interface.

When the device is exposed to light pulses, a chain of reactions is induced between oxygen molecules from the environment adsorbed onto the nanowire surface and the free electrons of the two-dimensional electron gases inside the oxide superlattice nanowires. This changes the conductance of the photonic synapses. Given the superlattice nanowires’ outstanding charge carrier mobility and sensitivity to light stimuli, this change in conductance resembles that of a biological synapse. Hence, the quasi-2DEG photonic synapses can imitate how neurons in the human brain transmit and memorize signals.

‘The special properties of the superlattice nanowire materials enable our synapses to have both photo-detecting and memory functions simultaneously. In simple words, the nanowire superlattice cores can detect the light stimulus with high sensitivity, and the nanowire shells promote the memory functions. So there is no need to construct additional memory modules for charge storage in an image sensing chip. As a result, our device can save energy’, Professor Ho explained.

Using the quasi-2DEG photonic synapses, the team built an artificial visual system that can accurately and efficiently detect a patterned light stimulus and ‘memorize’ its shape for an hour. ‘It is just like how our brain remembers what we saw for some time’, Professor Ho said.

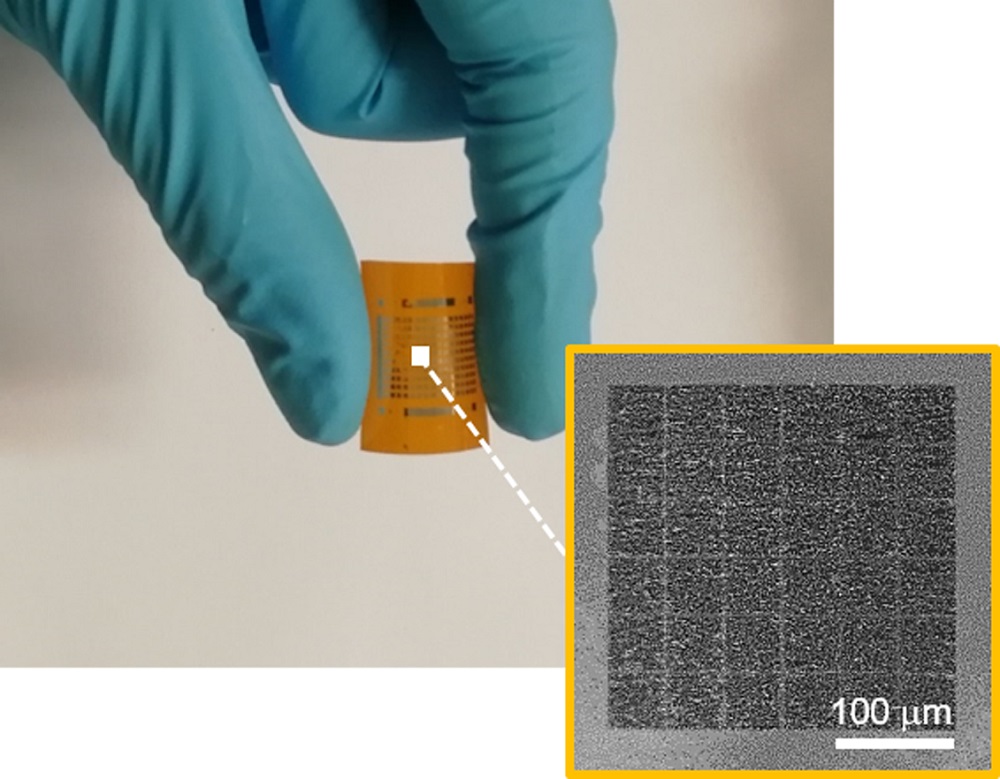

He added that synthesizing the photonic synapses and the artificial visual system did not require complicated equipment, and that the devices could be fabricated on flexible plastics at low cost.

The corresponding author of the paper is Professor Ho. The co-first authors are Meng You and Li Fangzhou, PhD students from MSE at CityU. Other team members include Dr Bu Xiuming, Dr Yip Sen-po, Kang Xiaolin, Wei Renjie, Li Dapan and Wang Fei, all from CityU. The collaborating researchers are from the University of Electronic Science and Technology of China, Kyushu University and the University of Tokyo.

The research study received funding from CityU, the Research Grants Council of Hong Kong SAR, the National Natural Science Foundation of China and the Science, Technology and Innovation Commission of Shenzhen Municipality.

By Marvellous Iwendi.

Source: CityU