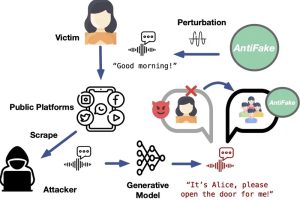

The rise of deepfakes, in which synthesized speech is used to mimic human and machine voices for malicious purposes, is a serious problem in today's world. Advances in generative artificial intelligence, largely geared towards improving personalized voice assistants, have made such synthesis far easier.

In response to this threat, Ning Zhang, assistant professor of computer science and engineering at Washington University in St. Louis, created AntiFake, a state-of-the-art defense mechanism designed to prevent unauthorized speech synthesis before it happens.

Unlike conventional deepfake detection techniques, which analyze and flag synthetic audio after an attack has already occurred, AntiFake is proactive. It uses adversarial techniques to make it considerably harder for AI tools to extract the vocal features they need from voice recordings, so that synthesis is prevented in the first place.

‘AntiFake makes sure that when we put voice data out there, it’s hard for criminals to use that information to synthesize our voices and impersonate us. The tool uses a technique of adversarial AI that was originally part of the cybercriminals’ toolbox, but now we’re using it to defend against them. We mess up the recorded audio signal just a little bit, distort or perturb it just enough that it still sounds right to human listeners, but it’s completely different to AI,’ said Zhang.
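The article does not describe AntiFake's actual optimization, so the sketch below is only a generic illustration of the idea Zhang describes: nudge each audio sample by a tiny, bounded amount chosen to mislead a model rather than a listener. It uses the fast gradient sign method (FGSM), a standard adversarial technique, against a hypothetical toy "speaker encoder" that is just a fixed linear map. The names `embed` and `protect`, the linear encoder, and the `epsilon` budget are illustrative assumptions, not AntiFake's code, and the per-sample amplitude cap is only a crude stand-in for real perceptual constraints.

```python
import random


def embed(signal, weights):
    """Hypothetical toy speaker encoder: a fixed linear projection W @ x."""
    return [sum(w * s for w, s in zip(row, signal)) for row in weights]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sign(v):
    return (v > 0) - (v < 0)


def protect(signal, weights, epsilon):
    """One FGSM-style step (illustrative only, not AntiFake's method).

    Shifts every sample by at most epsilon in the direction that lowers the
    similarity between the protected clip's embedding and the original one.
    """
    original = embed(signal, weights)
    # Similarity s(delta) = original . W(x + delta); its gradient is W^T original.
    grad = [sum(weights[i][j] * original[i] for i in range(len(weights)))
            for j in range(len(signal))]
    # Step against the gradient, keeping each sample change within +/- epsilon.
    return [s - epsilon * sign(g) for s, g in zip(signal, grad)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_samples, n_features = 64, 8
    clip = [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
    W = [[rng.gauss(0.0, 1.0) for _ in range(n_samples)] for _ in range(n_features)]

    protected = protect(clip, W, epsilon=0.01)
    e_orig, e_prot = embed(clip, W), embed(protected, W)
    print("max per-sample change:", max(abs(a - b) for a, b in zip(clip, protected)))
    print("similarity before:", dot(e_orig, e_orig))
    print("similarity after: ", dot(e_orig, e_prot))
```

Because the encoder here is linear, one signed step is guaranteed to lower the similarity score; real speech encoders are nonlinear, so practical tools typically iterate many such steps and add constraints to keep the change inaudible.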

To ensure AntiFake can stand up to ever-evolving threats, Zhang and first author Zhiyuan Yu designed the tool to generalize, and tested it against five advanced speech synthesizers, where it achieved a 95% protection rate. The team also tested AntiFake's usability with 24 human participants to confirm that the tool is accessible to diverse populations.

AntiFake can protect short voice clips, which makes it especially effective against the most common types of voice impersonation. However, Zhang explained that it could be improved to protect longer recordings or music.

‘Eventually, we want to be able to fully protect voice recordings. While I don’t know what will be next in AI voice tech (new tools and features are being developed all the time), I do think our strategy of turning adversaries’ techniques against them will continue to be effective. AI remains vulnerable to adversarial perturbations, even if the engineering specifics may need to shift to maintain this as a winning strategy,’ said Zhang.

By Marvellous Iwendi

Source: Newsroom