Researchers at DeepMind in London have shown that artificial intelligence can find shortcuts in a fundamental type of mathematical calculation by turning the problem into a game and applying the machine-learning techniques used to beat human players at games such as chess and Go.

The AI designed algorithms that break records for computational efficiency. The team's findings were published on 5 October in Nature.

‘It is very impressive. This work demonstrates the potential of using machine learning for solving hard mathematical problems,’ said Martina Seidl, computer scientist at Johannes Kepler University in Austria.

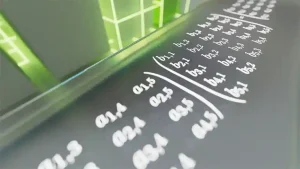

AlphaTensor, the AI developed by DeepMind, was designed to perform matrix multiplication, which involves multiplying numbers arranged in grids, or matrices. These grids might represent sets of pixels in images, the internal workings of an artificial neural network, or atmospheric conditions in a weather model.

In 1969, Volker Strassen discovered a way to multiply a pair of 2 x 2 matrices using seven multiplications, rather than eight, encouraging other researchers to look for more tricks.
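Strassen's trick can be written out in a few lines. The sketch below uses the standard textbook form of his seven products; the function name `strassen_2x2` and the sample matrices are illustrative choices, not taken from the study.

```python
def strassen_2x2(A, B):
    """Multiply two 2 x 2 matrices using seven multiplications instead of eight."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B

    # The seven products (each line contains exactly one multiplication).
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # The output entries are recovered with additions and subtractions only.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # prints [[19, 22], [43, 50]]
```

The saving of one multiplication looks small, but when the same scheme is applied recursively to large matrices split into blocks, the savings compound.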

AlphaTensor uses reinforcement learning, in which an AI agent interacts with its environment to achieve a multistep goal, such as winning a board game. If it performs well, the agent is reinforced: its internal parameters are updated to make future success more likely.

AlphaTensor also uses a game-playing technique known as tree search, in which the AI explores the outcomes of branching possibilities while planning its next action. During the tree search, the neural network is asked to predict the most promising actions at each step. The outcomes of the games are then used as feedback to improve the neural network, which in turn improves the tree search.
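The idea of a guided tree search can be illustrated on a toy puzzle. The sketch below is not AlphaTensor's search, which is a far more sophisticated method guided by a trained network. Here a hand-written ranking function (`rank_moves`, a hypothetical stand-in for the neural network) suggests which branches to try first, and the search looks ahead to find the shortest way to bring a number down to zero.

```python
def rank_moves(state, moves):
    """Stand-in for the neural network: order legal moves, most promising first."""
    legal = [m for m in moves if m <= state]
    return sorted(legal, reverse=True)  # naive guess: bigger steps look better


def search(state, moves, depth):
    """Depth-limited tree search. Returns a list of moves reaching 0, or None."""
    if state == 0:
        return []
    if depth == 0:
        return None
    for move in rank_moves(state, moves):
        rest = search(state - move, moves, depth - 1)
        if rest is not None:
            return [move] + rest
    return None


def shortest_plan(state, moves, max_depth=20):
    """Try deeper and deeper searches; the first plan found uses the fewest moves."""
    for depth in range(max_depth + 1):
        plan = search(state, moves, depth)
        if plan is not None:
            return plan
    return None


print(shortest_plan(10, [1, 5, 7]))  # prints [5, 5]
```

Always taking the biggest step first would give 7, 1, 1, 1, which is four moves. Looking ahead through the branches finds the two-move solution instead.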

Each game is a one-player puzzle that begins with a 3D tensor, representing the matrix multiplication to be solved, filled in correctly. The aim is to get all the numbers to zero in the fewest steps, choosing from a selection of allowable moves. Each move represents a calculation that, when inverted, combines entries from the first two matrices to create an entry in the output matrix.
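For the smallest case, the puzzle can be replayed by hand. The sketch below assumes the formulation in which each move subtracts the product of three short vectors from the tensor. It then plays Strassen's seven products as moves on the 2 × 2 board. The function names and the row-by-row flattening of the matrices are illustrative assumptions, not DeepMind's code.

```python
from itertools import product


def matmul_tensor(n=2):
    """Starting board: T[a][b][c] = 1 when entry a of A times entry b of B
    contributes to entry c of C = AB (matrices flattened row by row)."""
    size = n * n
    T = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i, j, k in product(range(n), repeat=3):
        T[i * n + j][j * n + k][i * n + k] = 1  # C[i][k] += A[i][j] * B[j][k]
    return T


def play_move(T, u, v, w):
    """One move: subtract the outer product of the vectors u, v and w from T."""
    size = len(T)
    return [[[T[a][b][c] - u[a] * v[b] * w[c] for c in range(size)]
             for b in range(size)] for a in range(size)]


def is_solved(T):
    """The game is won when every entry of the tensor is zero."""
    return all(x == 0 for plane in T for row in plane for x in row)


# Strassen's seven products as moves. Positions are [x11, x12, x21, x22];
# u picks entries of A, v picks entries of B, w says where the product lands in C.
STRASSEN_MOVES = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),    # (a11 + a22)(b11 + b22)
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),   # (a21 + a22) b11
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),   # a11 (b12 - b22)
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),   # a22 (b21 - b11)
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),   # (a11 + a12) b22
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),   # (a21 - a11)(b11 + b12)
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),   # (a12 - a22)(b21 + b22)
]

board = matmul_tensor(2)
for u, v, w in STRASSEN_MOVES:
    board = play_move(board, u, v, w)
print(is_solved(board), len(STRASSEN_MOVES))  # prints: True 7
```

Each move corresponds to one multiplication, so clearing the board in fewer moves means a multiplication algorithm with fewer multiplications.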

The game is challenging because the agent may need to choose from trillions of moves at each step. ‘Formulating the space of algorithmic discovery is very intricate, but even harder is, how can we navigate in this space,’ said Hussein Fawzi, co-author and computer scientist at DeepMind.

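To give AlphaTensor a head start, the researchers showed it examples of successful games so that it wouldn’t be starting from scratch. Because the order of the moves does not affect the result, whenever the AI found a successful series of moves, a reordered version of that series was also presented to it as a further example to learn from.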

‘It has got this amazing intuition by playing these games,’ said Pushmeet Kohli, DeepMind computer scientist.

‘AlphaTensor embeds no human intuition about matrix multiplication, so the agent in some sense needs to build its own knowledge about the problem from scratch,’ said Fawzi.

AlphaTensor’s general approach could also be applied to other types of mathematical operations, such as decomposing complex waves into simpler ones. ‘This development would be very exciting if it can be used in practice. A boost in performance would improve a lot of applications,’ said Virginia Vassilevska Williams, computer scientist at MIT.

‘While we may be able to push the boundaries a little further with this computational approach, I’m excited for theoretical researchers to start analyzing the new algorithms they’ve found to find clues for where to search for the next breakthrough,’ said Grey Ballard, computer scientist at Wake Forest University in Winston-Salem, North Carolina.

By Marvellous Iwendi.

Source: Nature